Data-Independent Acquisition (DIA) Profiling

DIA is now our default method for quantitative proteomic profiling. It performs much better than the label-free methods we have used in the past, and it is much cheaper and faster than TMT (see below).

Pros

- Can be very inexpensive (< $50 per sample)

- Good proteome depth

- Sensitive (single-cell analysis is possible)

- Fast turnaround time if you do the sample prep yourself

- Data analysis can be simpler than with other methods

Cons

- Does not work well for some less common PTMs

- May not work well for very complex multispecies proteomes

For more information, see this presentation:

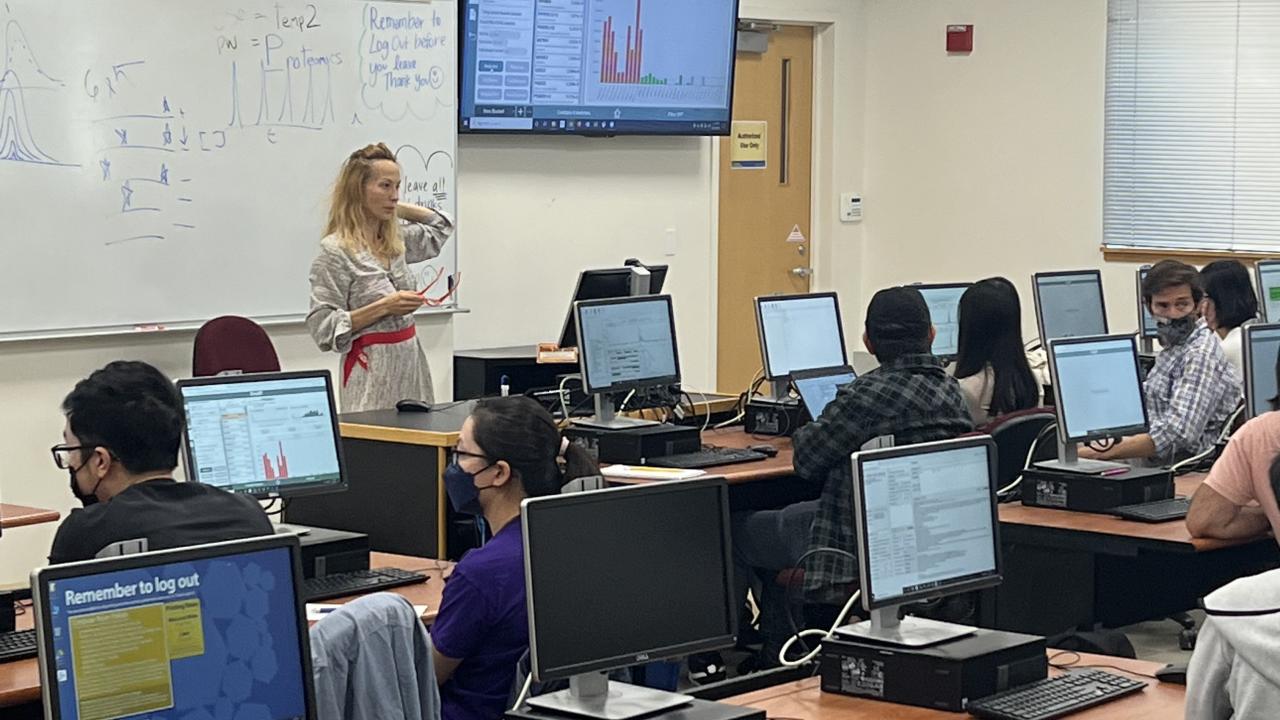

Why We Use DIA at the UC Davis Proteomics Core

Some of our recent example publications

Mammalian hybrid pre-autophagosomal structure HyPAS generates autophagosomes (published in Cell)

Stress granules and mTOR are regulated by membrane atg8ylation during lysosomal damage

Interactomic analysis reveals a homeostatic role for the HIV restriction factor TRIM5α in mitophagy

TMT-labeled Profiling

TMT still generates very good data.

Pros

- Good quantitative data

- Good proteome depth with peptide fractionation

Cons

- Expensive!

- Best analyzed on our most expensive mass spectrometer

- Turnaround time is long if you want us to do the labeling

TMT (Tandem Mass Tag) reagents are a balanced set of isobaric, isotope-labeled reporter-ion tags (currently 18 per set).

In summary, the workflow is:

- Digest proteins into peptides.

- Label the peptides with TMT, which reacts with free amines (lysine side chains and peptide N-termini).

- Mix your labeled samples together to create a multiplex.

- Fractionate the multiplex by high-pH fractionation if your sample is very complex (for example, a whole-cell lysate).

- Analyze your peptide fractions using SPS MS3 on our Fusion Lumos.
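
To make the digestion, labeling, and multiplexing steps concrete, here is a minimal Python sketch. It is illustrative only and rests on assumptions not stated on this page: trypsin as the protease (cleaving after K or R unless followed by P, with no missed cleavages), the TMTpro 18-plex monoisotopic mass of 304.2071 Da per label, complete labeling of every free amine, and one sample per channel. All function names are hypothetical.

```python
import math
import re
from typing import List

# Assumption: TMTpro (18-plex) monoisotopic mass added per label, in Da.
TMTPRO_MASS_DA = 304.2071


def tryptic_digest(protein: str) -> List[str]:
    """Hypothetical in silico trypsin digest: cut after K or R unless the
    next residue is P. Missed cleavages are not modeled."""
    return [p for p in re.split(r"(?<=[KR])(?!P)", protein) if p]


def tmt_label_sites(peptide: str) -> int:
    """Free amines available to TMT: the peptide N-terminus plus one per lysine."""
    return 1 + peptide.count("K")


def tmt_mass_shift(peptide: str) -> float:
    """Total mass added to a peptide, assuming every free amine is labeled."""
    return tmt_label_sites(peptide) * TMTPRO_MASS_DA


def multiplexes_needed(n_samples: int, channels: int = 18) -> int:
    """Number of multiplexes required if each sample occupies one channel."""
    return math.ceil(n_samples / channels)


if __name__ == "__main__":
    for pep in tryptic_digest("MKWVTFISLLRPKAGK"):
        print(pep, tmt_label_sites(pep), round(tmt_mass_shift(pep), 4))
    print("multiplexes for 40 samples:", multiplexes_needed(40))
```

Under these assumptions the peptide AGK carries two labels (its N-terminus plus one lysine), and a 40-sample study would need three 18-plex multiplexes before reserving any channels for reference samples.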

Some of our example publications

Proteome analysis of walnut bacterial blight disease

Here is a presentation of a dataset we analyzed recently. The author of the publication above helped us with the analysis. Make sure you read the slide notes, as most of the detailed information is there.

Extended_TMT_multiplexing_analysis (1).pptx